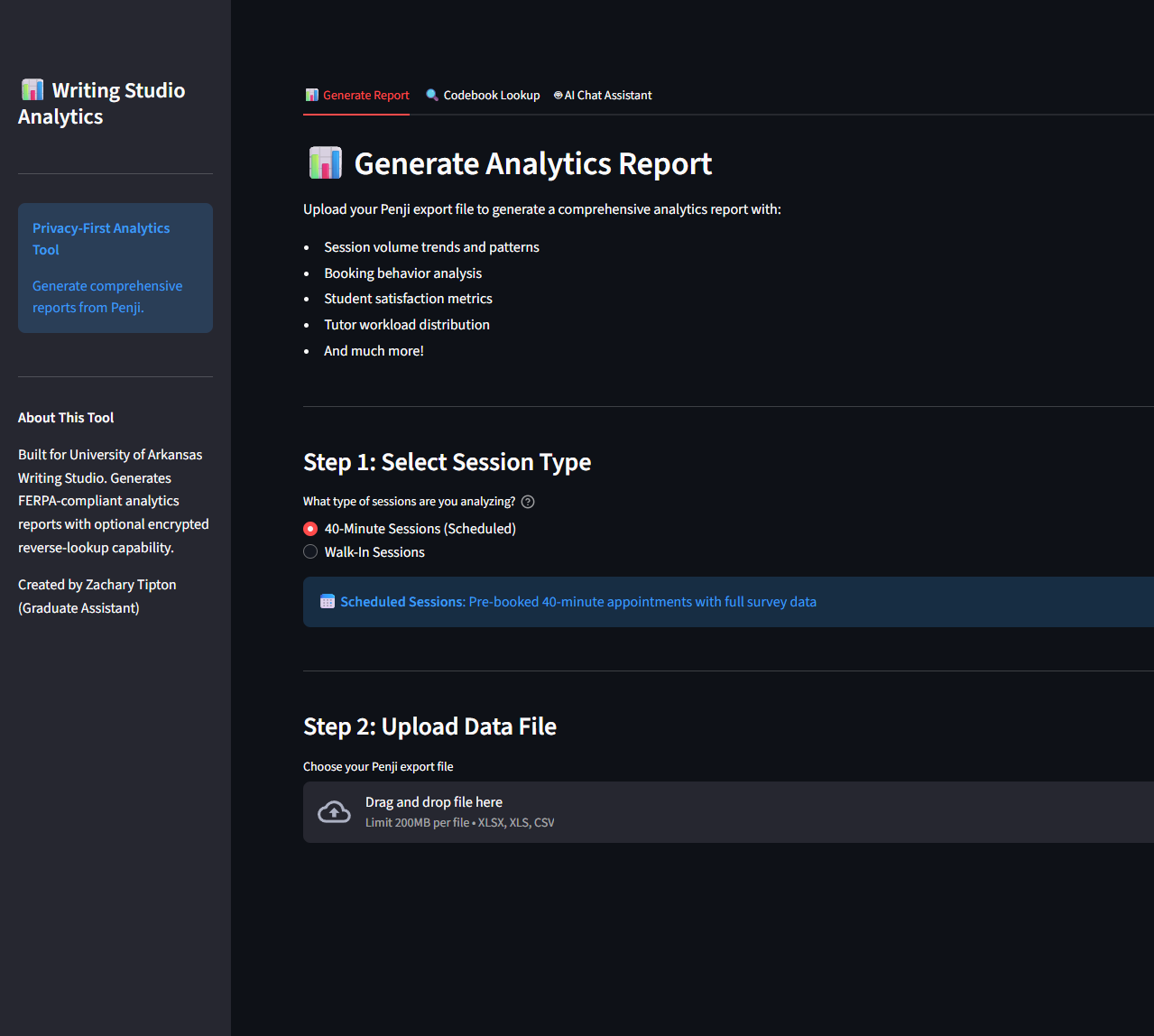

Problem

University writing centers collect session data through platforms like Penji, but analyzing that data means handling FERPA-protected student records. Cloud-based tools introduce compliance risk. Manual analysis is slow and inconsistent. The Writing Studio needed a way to generate standardized analytics reports without student data ever leaving the machine.

Solution

A self-contained Streamlit application that:

- Ingests Penji CSV/Excel exports and produces multi-section PDF reports with anonymized datasets

- Automatically detects and removes personally identifiable information (PII)

- Provides an optional AI chat interface powered by a local LLM (no cloud APIs)

- Ships as a portable package — no Python installation required, runs from a USB drive or shared folder
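The automatic PII removal can be sketched with the two-layer approach described under Privacy & Security: exact column-name matching first, then regex scanning of cell values for emails, SSO IDs, and names. This is a minimal standard-library sketch over a column-to-values dict, not the project's privacy.py. The column-name list, the SSO ID format (assumed to be three letters plus four to six digits), and the crude "First Last" name heuristic are all hypothetical.

```python
import re

# Layer 1: exact column names treated as PII (hypothetical list; adjust to the real export).
PII_COLUMN_NAMES = {"student email", "student name", "tutor name", "email", "sso id"}

# Layer 2: value patterns (hypothetical; the real SSO ID format is not documented here).
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+"),
    "sso_id": re.compile(r"\b[a-z]{3}\d{4,6}\b", re.IGNORECASE),
    "name": re.compile(r"\b[A-Z][a-z]+ [A-Z][a-z]+\b"),  # crude "First Last" heuristic
}


def detect_pii_columns(table, sample_size=50):
    """Return {column: reason} for columns that look like PII."""
    flagged = {}
    for column, values in table.items():
        # Layer 1: the header alone is enough to flag the column.
        if column.strip().lower() in PII_COLUMN_NAMES:
            flagged[column] = "column name"
            continue
        # Layer 2: scan a sample of string cells for PII-shaped values.
        for value in values[:sample_size]:
            if not isinstance(value, str):
                continue
            hit = next((label for label, rx in PII_PATTERNS.items() if rx.search(value)), None)
            if hit:
                flagged[column] = f"pattern:{hit}"
                break
    return flagged


def drop_pii(table):
    """Return (table without flagged columns, flagged-column report)."""
    flagged = detect_pii_columns(table)
    cleaned = {c: v for c, v in table.items() if c not in flagged}
    return cleaned, flagged
```

A capitalized-pair name pattern also matches phrases such as "Thesis Statement", so it over-flags free-text columns; for PII removal, over-flagging is the safer failure mode.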

Key Features

Report Generation

- Dual-mode support: Separate cleaning pipelines and report templates for 40-minute scheduled appointments and walk-in sessions

- Auto-detection: Identifies session type from column patterns in the uploaded file

- 11-section PDF reports for scheduled sessions (8 sections for walk-ins), covering booking behavior, attendance outcomes, student satisfaction, tutor workload, session content, incentive analysis, data quality, and semester comparisons

- Anonymized CSV export alongside the PDF for further analysis
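Auto-detection from column patterns could work roughly as in the following minimal sketch, which scores each session type by how many of its characteristic columns appear in the upload. The marker column names and the `min_hits` threshold are hypothetical, since the actual Penji export headers are not listed here.

```python
# Hypothetical marker columns; the real Penji export headers are not documented here.
SCHEDULED_MARKERS = {"appointment start", "booked at", "appointment status", "pre-session confidence"}
WALKIN_MARKERS = {"check-in time", "wait time", "queue position"}


def detect_session_type(columns, min_hits=2):
    """Return "scheduled", "walkin", or "unknown" from the uploaded file's column names."""
    normalized = {str(c).strip().lower() for c in columns}
    scheduled_hits = len(normalized & SCHEDULED_MARKERS)
    walkin_hits = len(normalized & WALKIN_MARKERS)
    # Too few signals, or equally strong signals for both types: do not guess.
    if max(scheduled_hits, walkin_hits) < min_hits or scheduled_hits == walkin_hits:
        return "unknown"
    return "scheduled" if scheduled_hits > walkin_hits else "walkin"
```

With a pandas DataFrame, `detect_session_type(df.columns)` works directly, since the column Index is iterable.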

Privacy & Security

- Two-layer PII detection: Exact column name matching + regex pattern scanning for emails, SSO IDs, and names

- SHA256-based anonymization: Deterministic anonymous IDs (e.g., `STU_04521`, `TUT_0842`) with collision handling

- Encrypted codebook: Optional reverse-lookup file using PBKDF2-HMAC-SHA256 key derivation (100,000 iterations) and Fernet symmetric encryption. Decryption requires a password of 12 or more characters, allowing supervisors to identify flagged individuals when needed.

- Response filtering: AI chat responses are scanned for PII leakage before display
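The key derivation behind the encrypted codebook can be sketched with the standard library's `hashlib.pbkdf2_hmac`, which implements the same PBKDF2-HMAC-SHA256 construction as the `PBKDF2HMAC` class in the cryptography package. The 100,000 iterations and 12-character minimum come from the description above; the 16-byte random salt, the salt-prefixed file layout, and the helper names are assumptions. Fernet is imported lazily, so the key-derivation part runs even where cryptography is not installed.

```python
import base64
import hashlib
import json
import os

PBKDF2_ITERATIONS = 100_000  # iteration count stated in the project description
MIN_PASSWORD_LENGTH = 12     # minimum password length stated in the project description
SALT_BYTES = 16              # assumption: salt size and storage layout are not documented


def derive_fernet_key(password: str, salt: bytes) -> bytes:
    """PBKDF2-HMAC-SHA256 -> 32 raw bytes -> URL-safe base64, the key format Fernet expects."""
    if len(password) < MIN_PASSWORD_LENGTH:
        raise ValueError(f"password must be at least {MIN_PASSWORD_LENGTH} characters")
    raw = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS, dklen=32)
    return base64.urlsafe_b64encode(raw)


def encrypt_codebook(mapping: dict, password: str) -> bytes:
    """Encrypt {anonymous_id: original_identifier}; output is salt + Fernet token (assumed layout)."""
    from cryptography.fernet import Fernet  # lazy import: only needed for actual encryption
    salt = os.urandom(SALT_BYTES)
    token = Fernet(derive_fernet_key(password, salt)).encrypt(json.dumps(mapping).encode("utf-8"))
    return salt + token


def decrypt_codebook(blob: bytes, password: str) -> dict:
    """Inverse of encrypt_codebook; a wrong password raises cryptography.fernet.InvalidToken."""
    from cryptography.fernet import Fernet
    salt, token = blob[:SALT_BYTES], blob[SALT_BYTES:]
    return json.loads(Fernet(derive_fernet_key(password, salt)).decrypt(token))
```

Storing a fresh random salt with each codebook means two codebooks encrypted with the same password still use different keys.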

AI Chat Assistant

- Model: Gemma 3 4B Instruct (Q4_0 quantized GGUF, ~3 GB)

- Inference: llama-cpp-python running locally on CPU (with optional CUDA/Metal GPU acceleration)

- Safety: Weighted input scoring system with 78 off-topic patterns, 43 jailbreak detection regexes, and post-generation response filtering

- Code execution: LLM can generate and run pandas/DuckDB queries against the loaded dataset in a restricted sandbox

- Query logging: All prompts are logged with timestamps and accept/reject status to `logs/queries.log`

- Graceful degradation: If the model file isn't downloaded, the tab shows a one-click download button with a progress bar. If the inference library isn't installed, the tab explains what's needed. The rest of the app works regardless.
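The query log could be written with Python's standard `logging` module, as in this minimal sketch. Only the log path and the timestamp plus accept/reject status come from the description above; the tab-separated line format and the `make_query_logger`/`log_query` helper names are assumptions.

```python
import logging
from pathlib import Path


def make_query_logger(log_path="logs/queries.log"):
    """Create a logger that appends timestamped query records to log_path."""
    path = Path(log_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    logger = logging.getLogger(f"query_log.{path.resolve()}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep prompts out of any console/root handlers
    if not logger.handlers:  # avoid duplicate handlers on Streamlit reruns
        handler = logging.FileHandler(path, encoding="utf-8")
        handler.setFormatter(logging.Formatter("%(asctime)s\t%(message)s"))
        logger.addHandler(handler)
    return logger


def log_query(logger, prompt, accepted, reason=""):
    """Write one line: timestamp, ACCEPTED/REJECTED, optional reason, prompt."""
    status = "ACCEPTED" if accepted else "REJECTED"
    one_line = " ".join(prompt.split())  # collapse newlines so each query stays on one line
    logger.info("%s\t%s\t%s", status, reason, one_line)
```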

Analytics Engine

Over 1,300 lines of metrics calculations covering:

- Booking behavior: Lead time distributions (same-day through 7+ days), temporal patterns by day and hour, day/time heatmaps

- Attendance: Completion, cancellation, and no-show rates — overall, by location (in-person vs. Zoom), by day of week, and by semester

- Student satisfaction: Pre/post confidence distributions, confidence change deltas, satisfaction ratings (1-7 scale), tutor rapport and session quality scores

- Tutor workload: Sessions per tutor, average session length, balance analysis

- Statistical outlier detection: IQR method with NaN preservation — outliers are removed from calculations but legitimate missing data is kept
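IQR filtering with NaN preservation can be sketched as follows: the fences are computed only from present values, outliers are dropped, and missing entries pass through untouched. The conventional Tukey multiplier k = 1.5 and the quartile method are assumptions; `statistics.quantiles(..., method="inclusive")` uses the same linear interpolation as NumPy's default percentile.

```python
import math
import statistics


def _is_missing(value):
    """Treat None and float NaN as legitimately missing data."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def iqr_fences(values, k=1.5):
    """Tukey fences computed from non-missing values only."""
    present = [v for v in values if not _is_missing(v)]
    q1, _, q3 = statistics.quantiles(present, n=4, method="inclusive")
    spread = q3 - q1
    return q1 - k * spread, q3 + k * spread


def drop_outliers_keep_missing(values, k=1.5):
    """Remove values outside the fences; missing entries are kept in place."""
    low, high = iqr_fences(values, k)
    return [v for v in values if _is_missing(v) or low <= v <= high]


# Hypothetical session lengths in minutes, with one missing value and one outlier.
lengths = [30, 35, 40, 38, None, 42, 240]
cleaned = drop_outliers_keep_missing(lengths)  # → [30, 35, 40, 38, None, 42]
```

Averages would then be taken over the non-missing survivors, so the 240-minute outlier no longer skews them while the missing row still counts toward data-quality totals.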

Tech Stack

| Layer | Technology | Role |
|---|---|---|
| UI | Streamlit | Web interface, file uploads, tab navigation |
| Data | pandas, NumPy, DuckDB | Cleaning, transformation, fast SQL queries |
| Visualization | Matplotlib, Seaborn, Altair | Charts for PDF reports and interactive display |
| PDF | ReportLab | Multi-page report generation with embedded charts |
| Privacy | cryptography, hashlib | Anonymization and encrypted codebook |
| AI | llama-cpp-python, Gemma 3 4B | Local LLM inference, no external API calls |
| Distribution | Embedded Python 3.11, batch scripts | Portable package, no installation required |

Architecture

The project follows a modular structure with clear separation of concerns:

- app.py: Entry point (Streamlit app, ~900 lines)
- src/
  - core/
    - data_cleaner.py: 10-step scheduled session cleaning pipeline
    - walkin_cleaner.py: Walk-in session cleaning pipeline
    - metrics.py: Scheduled session analytics (1,384 lines)
    - walkin_metrics.py: Walk-in-specific metrics
    - privacy.py: PII detection, SHA256 anonymization, Fernet encryption
    - location_metrics.py: Location-based analytics
  - ai_chat/
    - chat_handler.py: Orchestrates queries, validation, code execution
    - llm_engine.py: Gemma model loading and inference wrapper
    - safety_filters.py: Input validation + response filtering
    - code_executor.py: Sandboxed pandas/DuckDB code execution
    - query_engine.py: Natural language to SQL translation via DuckDB
    - prompt_templates.py: System prompts built from data context
    - setup_model.py: Model download and system requirements check
  - visualizations/
    - report_generator.py: Scheduled session PDF report (11 sections)
    - walkin_report_generator.py: Walk-in session PDF report (8 sections)
    - charts.py: Reusable chart functions
  - utils/
    - academic_calendar.py: Semester detection from dates

Design Decisions

Local-only inference

Student data never leaves the machine. The LLM runs on CPU via llama-cpp-python rather than calling an external API, which eliminates FERPA concerns around data transmission.

Deterministic anonymization

SHA256 hashing produces the same anonymous ID for the same email across runs, so longitudinal analysis works without storing raw identifiers.
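Deterministic IDs with collision handling can be sketched as below. Several details are assumptions not documented above: identifiers are normalized with strip/lower before hashing, the ID number is the SHA256 digest reduced modulo 10^digits (5 digits for students and 4 for tutors, inferred from the example IDs), and collisions are resolved by linear probing. One consequence of probing is that an ID assigned after a collision depends on processing order, so strict cross-run stability holds only for identifiers that never collide.

```python
import hashlib


class Anonymizer:
    """Hypothetical sketch: maps raw identifiers to stable IDs such as STU_04521."""

    def __init__(self, prefix="STU", digits=5):
        self.prefix = prefix
        self.digits = digits
        self.space = 10 ** digits  # number of possible IDs for this prefix
        self._by_key = {}          # normalized identifier -> anonymous ID
        self._taken = set()        # anonymous IDs already assigned

    def anonymize(self, identifier: str) -> str:
        key = identifier.strip().lower()  # assumption: emails compared case-insensitively
        if key in self._by_key:
            return self._by_key[key]
        if len(self._taken) >= self.space:
            raise RuntimeError("ID space exhausted; use more digits")
        digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
        slot = int(digest, 16) % self.space
        anon = f"{self.prefix}_{slot:0{self.digits}d}"
        while anon in self._taken:  # collision: probe the next slot
            slot = (slot + 1) % self.space
            anon = f"{self.prefix}_{slot:0{self.digits}d}"
        self._by_key[key] = anon
        self._taken.add(anon)
        return anon
```

Tutor IDs in the `TUT_0842` style would come from a separate instance, for example `Anonymizer(prefix="TUT", digits=4)`.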

Graceful degradation

Every optional feature (AI chat, walk-in mode, GPU acceleration) disables itself cleanly if its dependencies are missing, rather than crashing. The app always works at its core: upload data, get a report.
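The dependency checks behind graceful degradation can be sketched as a status function that the AI chat tab branches on. It uses `importlib.util.find_spec` to test whether llama-cpp-python (import name `llama_cpp`) is installed without actually importing it, then checks for the model file. The function name and status strings are hypothetical; the two failure cases mirror the behavior described under AI Chat Assistant.

```python
import importlib.util
from pathlib import Path


def ai_chat_status(model_path, backend_module="llama_cpp"):
    """Report whether the optional AI chat tab can run, without importing heavy dependencies."""
    if importlib.util.find_spec(backend_module) is None:
        return "missing_library"  # tab explains which package is needed
    if not Path(model_path).is_file():
        return "missing_model"    # tab offers the one-click download
    return "ready"
```

Because the check never imports the backend, a missing or broken inference library cannot crash the rest of the app at startup.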

Portable Python

The target user has no development tools and limited admin access on a university-managed machine. Embedding Python and all dependencies in the distribution folder avoids installation entirely.

Weighted safety scoring

Rather than a simple keyword blocklist, the AI input validator uses a scoring system (+2 for data-relevant terms, -3 for off-topic, -5 for harmful) so that a query mentioning both data terms and an incidental flagged word isn't falsely rejected.
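A minimal sketch of the weighted scoring follows, using the +2/-3/-5 weights stated above. The term lists are tiny hypothetical stand-ins for the project's 78 off-topic patterns and 43 jailbreak regexes, and the acceptance threshold (a score of at least 1) is an assumption.

```python
import re

# Weights from the description above; the term lists are hypothetical stand-ins.
WEIGHTS = {"data": 2, "off_topic": -3, "harmful": -5}
TERMS = {
    "data": ["session", "tutor", "attendance", "no-show", "cancellation", "booking", "satisfaction"],
    "off_topic": ["football", "recipe", "weather", "movie"],
    "harmful": ["home address", "phone number", "ignore previous instructions"],
}
# One compiled pattern per term; the trailing \w* lets "tutor" also match "tutors".
PATTERNS = {
    category: [re.compile(r"\b" + re.escape(term) + r"\w*", re.IGNORECASE) for term in terms]
    for category, terms in TERMS.items()
}


def score_query(query):
    """Sum the category weight once for every distinct term found in the query."""
    score = 0
    for category, patterns in PATTERNS.items():
        hits = sum(1 for p in patterns if p.search(query))
        score += WEIGHTS[category] * hits
    return score


def is_allowed(query, threshold=1):
    """Assumed rule: accept only if data relevance outweighs the penalties."""
    return score_query(query) >= threshold
```

With these weights, "Which tutor had the most no-shows during football season?" scores 2 + 2 - 3 = 1 and passes, although a plain blocklist on "football" would reject it. "List each tutor's session count and home address" scores 2 + 2 - 5 = -1 and is rejected.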

Distribution

The app ships as a portable folder (~600 MB zipped, excluding the AI model) that runs on any Windows machine without Python installed:

- WritingStudioAnalytics/
  - python/: Embedded Python 3.11 + all dependencies
  - src/: Source code
  - models/: Empty; the user downloads the AI model here (~3 GB, optional)
  - app.py: Main application
  - launch.bat: Double-click to start

The AI model is hosted on S3 and can be downloaded from within the app with a single button click and a progress bar. The full analytics and reporting features work without it.
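The in-app download can be sketched with the standard library: stream the file in chunks, report the completed fraction to a callback, and write to a temporary `.part` file so an interrupted download never leaves a truncated model in `models/`. The function name, chunk size, and `.part` convention are assumptions, and the actual S3 URL is not given here, so it stays a parameter.

```python
import urllib.request
from pathlib import Path


def download_with_progress(url, dest, on_progress=None, chunk_size=1 << 20):
    """Stream url to dest in chunks, reporting the fraction completed (0.0 to 1.0)."""
    dest = Path(dest)
    dest.parent.mkdir(parents=True, exist_ok=True)
    partial = dest.with_suffix(dest.suffix + ".part")  # temporary name while downloading
    with urllib.request.urlopen(url) as response, open(partial, "wb") as out:
        total = int(response.headers.get("Content-Length") or 0)
        done = 0
        while True:
            chunk = response.read(chunk_size)
            if not chunk:
                break
            out.write(chunk)
            done += len(chunk)
            if on_progress and total:  # skip progress if the server sent no length
                on_progress(min(done / total, 1.0))
    partial.replace(dest)  # only a complete download gets the final name
    return dest
```

In Streamlit, the callback could be the `progress` method of the element returned by `st.progress(0.0)`, which accepts a float between 0.0 and 1.0.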

Key Takeaways

This project demonstrates several critical technical capabilities:

- Privacy Engineering: Implementing robust PII detection, anonymization, and encryption to ensure FERPA compliance while maintaining data utility

- System Architecture: Designing a modular, maintainable codebase with clear separation between data processing, visualization, AI components, and utilities

- End-User Focus: Creating a solution that runs without installation or technical knowledge, addressing real user constraints and institutional environments

- Local AI Integration: Successfully integrating local LLM inference with safety systems, code execution capabilities, and graceful degradation patterns

- Analytics Depth: Building a comprehensive analytics engine with 1,300+ lines of metrics calculations covering diverse dimensions of writing center operations